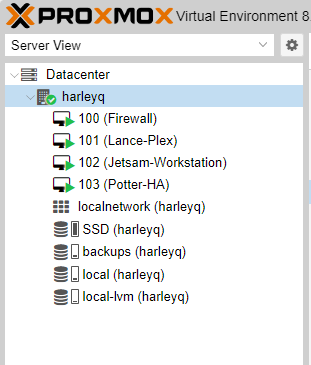

OVH's weird GRUB / EFI custom setup issues - Proxmox

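After a recent upgrade from PVE 8 to 9, I ran into boot issues on OVH/SYS/KI servers. It turns out that OVH do some weird stuff with boot disks in the initial setup: the server ends up with several EFI System Partitions (ESPs), all labelled EFI_SYSPART. Only the one mounted at /boot/efi gets updated, so the copies on the other disks can go stale. The issue and fix are discussed in more detail in the following Proxmox forum post, but I wanted to copy the scripts here in case the forum ever disappears.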

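The first script, written by a very kind engineer at OVH, is a one-off fix for the sync issue. It finds the ESP containing the most recently modified file and copies its contents onto every other ESP. Because it only repairs the current state, it needs to be run again whenever GRUB is updated.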
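The key decision in this script is which ESP counts as the freshest. It mounts every partition labelled EFI_SYSPART and keeps the one holding the file with the newest modification time. The sketch below reproduces that selection logic against ordinary directories, so it can be tried without mounting anything. `newest_mtime` and `pick_newest` are hypothetical helper names and are not part of the original script.

```bash
#!/bin/bash
# Dry-run sketch of the "which ESP is newest?" step, using plain directories
# instead of mounted partitions. Helper names are hypothetical; the pipeline
# in newest_mtime is the same find/cut/sort/tail chain the script uses.
set -euo pipefail

# Print the newest file modification time under DIR as whole epoch seconds.
# Output is empty if DIR contains no files.
newest_mtime() {
  find "$1" -type f -printf "%T@\n" | cut -d. -f1 | sort -n | tail -n1
}

# Print whichever of the given directories holds the most recently modified file.
pick_newest() {
  local dir mtime best="" best_mtime=-1
  for dir in "$@"; do
    mtime=$(newest_mtime "${dir}")
    # An empty directory never wins
    if [[ -n "${mtime}" && ${mtime} -gt ${best_mtime} ]]; then
      best_mtime=${mtime}
      best=${dir}
    fi
  done
  printf '%s\n' "${best}"
}
```

To use it on a real server, mount each ESP read-only first, for example `mount -o ro /dev/sda1 /mnt/esp-a` (device and mountpoint names are placeholders). Then run `pick_newest /mnt/esp-a /mnt/esp-b` to see which copy the script would treat as the source before it overwrites anything.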

#!/bin/bash
set -euo pipefail
### Find the ESP containing the most recently modified file
overall_newest_mtime=0
while read -r partition; do
  mountpoint=$(mktemp -d)
  mount "${partition}" "${mountpoint}"
  newest_mtime=$(find "${mountpoint}" -type f -printf "%T@\n" | cut -d. -f1 | sort -n | tail -n1)
  if [[ $newest_mtime -gt $overall_newest_mtime ]]; then
    overall_newest_mtime=${newest_mtime}
    newest_esp=${partition}
    echo "${partition} is currently the newest ESP with a file modified at $(date -d @"${newest_mtime}" -Is)"
  fi
  umount "${mountpoint}"
  rmdir "${mountpoint}"
done < <(blkid -o device -t LABEL=EFI_SYSPART)
### Copy the newest ESP onto every other ESP
newest_esp_mountpoint=$(mktemp -d)
mount "${newest_esp}" "${newest_esp_mountpoint}"
while read -r partition; do
  if [[ "${partition}" == "${newest_esp}" ]]; then
    continue
  fi
  echo "Copying data from ${newest_esp} to ${partition}"
  mountpoint=$(mktemp -d)
  mount "${partition}" "${mountpoint}"
  rsync -ax "${newest_esp_mountpoint}/" "${mountpoint}/"
  umount "${mountpoint}"
  rmdir "${mountpoint}"
done < <(blkid -o device -t LABEL=EFI_SYSPART)
umount "${newest_esp_mountpoint}"
rmdir "${newest_esp_mountpoint}"
echo "Done synchronizing ESPs"

While this fixes the immediate issue, it does nothing to put a permanent fix in place. OVH are rolling out a new configuration for new builds that doesn't have this issue, so the easiest fix is to rebuild your dedicated server. If that isn't an option, the same engineer wrote a second script, reproduced below. It puts the server into a more standard configuration by moving the ESP onto an mdadm RAID1 array.
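The conversion script relies on mdadm's 0.90 metadata format, as a comment inside it points out. The kernel RAID wiki page it links describes where a 0.90 superblock goes: it is 4 KiB long and sits in a 64 KiB-aligned block near the end of the device. To find that block, round the device size down to a multiple of 64 KiB, then subtract 64 KiB. The FAT filesystem still starts at offset 0, which is why the firmware can read each RAID member as an ordinary ESP. The sketch below shows that arithmetic; `md090_superblock_offset` is a hypothetical name.

```bash
#!/bin/bash
# Byte offset of an mdadm 0.90 superblock on a device of the given size:
# round the size down to a 64 KiB multiple, then step back one 64 KiB block.
set -euo pipefail

md090_superblock_offset() {
  local size_bytes=$1
  local block=$((64 * 1024))
  echo $(( (size_bytes / block) * block - block ))
}
```

Consider a 511 MiB partition (535822336 bytes), the size mentioned in the script's backup comment. For that size, `md090_superblock_offset 535822336` prints 535756800, so the superblock lives in the last 64 KiB of the partition. The FAT filesystem is created on the md device, which only exposes the space in front of the superblock, so the two do not overlap. On a real system, `blockdev --getsize64` gives a partition's size in bytes. `mdadm --examine` on a member shows the superblock mdadm actually wrote.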

#!/bin/bash
# DISCLAIMER:
# This script is provided "as is" with no guarantees. Use at your own risk.
# The author takes no responsibility for any data loss, system breakage,
# or other damage that may occur from running it.

set -euo pipefail

ESP_BACKUP_PATH="/root/efi_system_partition_data"

### Ensure required commands are installed
# mdadm is checked here too, so a missing binary is caught before the ESPs are wiped
for command in mdadm mkfs.vfat rsync; do
  if ! command -v "${command}" >&/dev/null; then
    echo "Could not find ${command}, make sure it is installed" >&2
    exit 1
  fi
done

### Ensure LABEL=EFI_SYSPART returns something
if [[ ! $(blkid -o device -t LABEL=EFI_SYSPART) ]]; then
  echo "LABEL=EFI_SYSPART returned nothing, aborting" >&2
  exit 1
fi

# Exit if the ESP is already over RAID1
if [[ $(blkid -o device -t LABEL=EFI_SYSPART | xargs lsblk -ndo TYPE) == raid1 ]]; then
  echo "The ESP is already over RAID1, nothing to do"
  exit 0
fi

### Ensure LABEL=EFI_SYSPART only points to partitions and not a broken mix of part/raid1
if [[ $(blkid -o device -t LABEL=EFI_SYSPART | xargs lsblk -ndo TYPE | sort -u) != part ]]; then
  echo "LABEL=EFI_SYSPART returns multiple device types, aborting" >&2
  exit 1
fi

### Don't do anything if there is only one ESP
if [[ $(blkid -o device -t LABEL=EFI_SYSPART | wc -l) == 1 ]]; then
  echo "Only one ESP, nothing to do"
  exit 0
fi

### Unmount /boot/efi
if mountpoint -q /boot/efi; then
  umount "/boot/efi"
  echo "Unmounted /boot/efi"
  # If the script is running on the installed OS (not on a rescue system), we
  # need to unmount /boot/efi and remount it at the end of the script
  esp_mountpoint=/boot/efi
else
  # If the script is running on a system where /boot/efi is not mounted, it's
  # likely a rescue system so we'll use a temporary mountpoint
  esp_mountpoint=$(mktemp -d)
fi

### Find the newest ESP
overall_newest_mtime=-1
while read -r partition; do
  mountpoint=$(mktemp -d)
  mount -o ro "${partition}" "${mountpoint}"
  newest_mtime=$(find "${mountpoint}" -type f -printf "%T@\n" | cut -d. -f1 | sort -n | tail -n1)
  if [[ ${newest_mtime} -gt ${overall_newest_mtime} ]]; then
    overall_newest_mtime=${newest_mtime}
    newest_esp=${partition}
  fi
  umount "${mountpoint}"
  rmdir "${mountpoint}"
  if findmnt --source "${partition}" >/dev/null; then
    echo "${partition} is still mounted somewhere, aborting" >&2
    exit 1
  fi
done < <(blkid -o device -t LABEL=EFI_SYSPART)
echo "${newest_esp} is the newest ESP with a file modified at $(date -d @"${overall_newest_mtime}" -Is)"

### Copy ESP contents
newest_esp_mountpoint=$(mktemp -d)
mount -o ro "${newest_esp}" "${newest_esp_mountpoint}"
mkdir -p "${ESP_BACKUP_PATH}"
rsync -aUx "${newest_esp_mountpoint}/" "${ESP_BACKUP_PATH}/files/"
echo "Copied newest ESP contents to ${ESP_BACKUP_PATH}/files/"
umount "${newest_esp_mountpoint}"
rmdir "${newest_esp_mountpoint}"

### Back up and wipe ESPs
partitions=()
while read -r partition; do
  backup_file="${ESP_BACKUP_PATH}/$(basename "${partition}")"
  # Create a full backup of the ESP, this uses roughly 511 MiB per ESP
  dd if="${partition}" of="${backup_file}" status=none
  echo "Backed up ${partition} as ${backup_file}"
  echo "Wiping signatures from ${partition}"
  wipefs -aq "${partition}"
  echo "Wiped signatures from ${partition}"
  partitions+=("${partition}")
done < <(blkid -o device -t LABEL=EFI_SYSPART)

### Create ESP over RAID1
# Prefer md0 if possible
if [[ ! -e /dev/md0 ]]; then
  md_number=0
else
  highest_md_number=$(find /dev/ -mindepth 1 -maxdepth 1 -regex '^/dev/md[0-9]+$' -printf "%f\n" | sed "s/^md//" | sort -n | tail -n 1)
  md_number=$((highest_md_number + 1))
fi
raid_device="/dev/md${md_number}"
echo "Creating RAID1 device ${raid_device}"
# With metadata version 0.90, the superblock is located at the end of the partition, so the firmware sees it as a normal FAT filesystem
# https://archive.kernel.org/oldwiki/raid.wiki.kernel.org/index.php/RAID_superblock_formats.html
mdadm --create --verbose "${raid_device}" --level=1 --metadata=0.90 --bitmap=internal --raid-devices="${#partitions[@]}" "${partitions[@]}"

echo "Creating FAT filesystem on ${raid_device}"
mkfs.vfat -n EFI_SYSPART "${raid_device}" >/dev/null
mount "${raid_device}" "${esp_mountpoint}"
echo "Copying ESP contents to ${raid_device} mounted on ${esp_mountpoint}"
rsync -aUx "${ESP_BACKUP_PATH}/files/" "${esp_mountpoint}/"
echo "The ESP is now over RAID1:"
lsblk -s "${raid_device}"

if [[ ${esp_mountpoint} != /boot/efi ]]; then
  echo "Unmounting ${esp_mountpoint}"
  umount "${esp_mountpoint}"
  rmdir "${esp_mountpoint}"
fi

echo "Script completed successfully, please check the /boot/efi entry in /etc/fstab, update mdadm.conf and rebuild the initramfs"

Source: Convert EFI System Partitions to RAID1 on OVHcloud bare-metal servers · GitHub
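The conversion script ends by asking you to do three things: check the /boot/efi entry in /etc/fstab, update mdadm.conf and rebuild the initramfs. Below is a minimal sketch of the fstab check. It assumes a standard whitespace-separated fstab, and `fstab_source` is a hypothetical helper name.

```bash
#!/bin/bash
# Print the source field (LABEL=..., UUID=..., /dev/...) of the fstab entry
# for a mountpoint. Defaults to /boot/efi in /etc/fstab.
set -euo pipefail

fstab_source() {
  local mountpoint=${1:-/boot/efi}
  local fstab=${2:-/etc/fstab}
  # Skip comment lines; field 2 is the mountpoint, field 1 is the source
  awk -v mp="${mountpoint}" '$1 !~ /^#/ && NF >= 2 && $2 == mp { print $1 }' "${fstab}"
}
```

If `fstab_source` prints a `UUID=` value, compare it with `blkid -s UUID -o value /dev/mdX` (the md device the script created). mkfs.vfat gives the new filesystem a fresh volume ID, so an old UUID entry will no longer match. A `LABEL=EFI_SYSPART` entry should still resolve, because the script recreates the filesystem with that label, but `findmnt --verify` is a quick way to confirm it.

On Debian-based systems such as Proxmox VE, the remaining two steps usually look like this:

1. Run `mdadm --detail --scan`, review its output, and add the ARRAY line for the new device to /etc/mdadm/mdadm.conf.
2. Run `update-initramfs -u -k all`.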